When I was asked to write a blog post about VR audio, my first thought was not to dive into 3D audio technology like ambisonics or binaural listening. This post is aimed at smaller developers, for whom sound has traditionally been something to address very late in the development process. I would like to present a simpler concept: in game audio and in VR, everything is on, all the time, everywhere. This central concept is essential when addressing spawning, panning, and mixing.

Gaming Before VR Audio:

When I was working on Call of Duty: World at War, we really swung for the fences with 3D audio. Granted, it was a single horizontal plane in surround sound, but we really went for the idea of “this object is making its own sound at this spot, with this fade over distance.” Very little was done with a static background. Rather than creating a general mix of things in the world, we attached individual mono sounds to the world objects in the game. If the object was a fire, a looping fire sound would play at that spot. The beautiful thing about this method is that there is no mix panning: the sound is where the object is. The problem with this method is that the sound is ALWAYS where the object is.

When film audio mixers pan and mix, much depends on what you see on the screen, which under the best circumstances covers the front 90 degrees. A loud sound outside this field of vision will often be turned down manually and severely, even at 90 degrees right, and may be gone entirely at 180 degrees from the camera direction.

Sound Placement – The Double-Edged Sword:

In the video game world, having the sound where the object ACTUALLY IS can be a serious double-edged sword. Designers often spawn a loud object just off screen because you can’t see it yet. This is a problem with (actual example) a helicopter, simply because you can hear helicopters coming. A designer was angry at me because the helicopter sound started suddenly when he spawned it 100 virtual yards from the listener, behind a rock. “Well, can’t you just fade it in?” The short (and utterly incorrect) answer is yes, I can, but helicopters don’t just fade in over three seconds in the real world. They come flying in from miles away, probably changing course on the way. I could make a premade sound for this long approach, but what if the player suddenly looks toward it? Wow. There’s a sound there with no helicopter. The only remaining options are to either premake the incoming sound and camouflage the approach, or just damn well make the helicopter model fly the approach pattern.

Pan and Volume

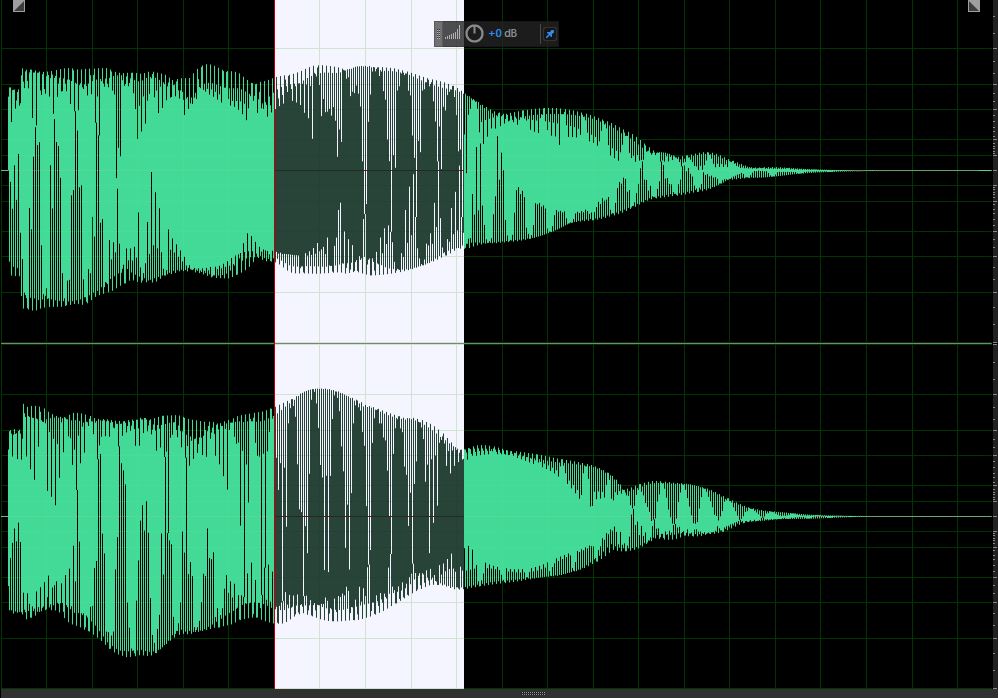

That covers the problem of spawning, but what about pan and volume? The helicopter is probably a bad example, simply because helicopters are generally loud and most often involved in the story/action. What about a simple object like a generator? It’s loud, but not especially important. This is precisely the type of sound I described in the film audio paragraph. Generators are absolutely BRÜTAL in a mix because they eat up a ton of the sonic spectrum. They consume dialogue, music, and anything else that enters their event horizon. They can be off screen, but if they are right behind you in-game, they are still at full volume and trying to murder your mix. I was at the Game Developers Conference, at the Crytek presentation, when the solution to this was laid before me. They used FMOD, an audio engine that is very good at manipulating sounds based on incoming game data. They were able to set parameters such that all sounds outside 90 degrees of the player’s view direction were dropped by (x) dB once they reached (y) degrees off center. This meant that if you’re looking at the generator, it’s at full volume; if you look away, it is turned down. This was overridable on a per-sound basis for important game-related sounds like the helicopter above.
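The off-axis rule described above can be sketched in a few lines. This is an engine-agnostic illustration on a single horizontal plane, not Crytek’s or FMOD’s actual implementation: the function names, the linear-in-dB ramp, and the example values for the start angle, full angle, and maximum drop (standing in for the (x) dB and (y) degrees above) are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SoundSource:
    name: str
    x: float
    y: float
    important: bool = False  # hypothetical per-sound override (e.g. the helicopter)


def off_axis_angle_deg(listener_x, listener_y, facing_deg, src_x, src_y):
    """Unsigned angle (0..180 degrees) between the view direction and the source."""
    to_src = math.degrees(math.atan2(src_y - listener_y, src_x - listener_x))
    diff = (to_src - facing_deg + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return abs(diff)


def off_axis_gain_db(angle_deg, start_deg=90.0, full_deg=150.0, max_drop_db=9.0):
    """0 dB up to start_deg, then a linear ramp (in dB) down to -max_drop_db at full_deg.

    The default values are placeholders, not settings from the GDC talk.
    """
    if angle_deg <= start_deg:
        return 0.0
    if angle_deg >= full_deg:
        return -max_drop_db
    t = (angle_deg - start_deg) / (full_deg - start_deg)
    return -max_drop_db * t


def db_to_linear(db):
    """Convert a dB offset to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def source_gain_db(source, listener_x, listener_y, facing_deg, **curve):
    """Off-axis attenuation for one source; important sounds are never ducked."""
    if source.important:
        return 0.0
    angle = off_axis_angle_deg(listener_x, listener_y, facing_deg, source.x, source.y)
    return off_axis_gain_db(angle, **curve)


if __name__ == "__main__":
    generator = SoundSource("generator", 10.0, 0.0)
    helicopter = SoundSource("helicopter", -100.0, 0.0, important=True)
    # Listener at the origin; 0 degrees means facing along +x.
    print(source_gain_db(generator, 0.0, 0.0, 0.0))    # 0.0 (looking at it)
    print(source_gain_db(generator, 0.0, 0.0, 180.0))  # -9.0 (turned away)
    print(source_gain_db(helicopter, 0.0, 0.0, 0.0))   # 0.0 (override)
```

Ramping in dB rather than in linear gain keeps the change smooth as the player turns, and the `important` flag plays the role of the per-sound override for sounds like the helicopter.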

Sound is No Longer an Afterthought

This approach is very common, and old news for the big studios by now. Generally, small studios and indies have done only enough sound to cover their product, and it is an afterthought at best. With the rise of VR, however, audio has suddenly gained prominence. Last year’s GDC had several audio seminars on the VR track that were (gulp) well attended. My thought on this is that games before VR have always been confined to a screen. As long as the sound came pretty much from the screen, it was good enough. Now we have a carefully crafted 3D visual illusion that can be undone by non-directional audio. Large studios can already handle this with the approach above by expanding the horizontal sound horizon to a spherical one using an ambisonic output. The small and indie studios I have seen are largely struggling with it, because sound has been something you could add at the very end without engineering it much. If you want an acceptable product in the VR world, however, audio needs to be addressed in the design phase and tackled technically on day one. (Maybe two.)

Assuming you’re using an entry-level engine like Unity, yes, you can turn on 3D audio for a mono source, but guess what? As long as you are within your minimum range, everything is on, all the time, everywhere. You are going to have to reconcile your field of vision with what a GDC presenter (sorry, I can’t find his name) called the Field of Audition. Field of vision = things you can see. Field of Audition = things you can hear. Failing to do this early on is the quickest way to sabotage your own product.
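To make the distinction concrete, here is a rough sketch that checks one source against both fields on a single horizontal plane. It assumes a linear rolloff between a minimum and a maximum distance (engines such as Unity offer similar rolloff options, but this is not any engine’s exact curve), treats “audible at all” as being inside the Field of Audition, and uses hypothetical values for the field-of-view width and the distances.

```python
import math


def angle_off_center_deg(listener, facing_deg, source):
    """Unsigned horizontal angle (0..180) between the view direction and the source."""
    to_src = math.degrees(math.atan2(source[1] - listener[1], source[0] - listener[0]))
    return abs((to_src - facing_deg + 180.0) % 360.0 - 180.0)


def linear_rolloff(distance, min_dist, max_dist):
    """Full volume inside min_dist, fading linearly to silence at max_dist."""
    if distance <= min_dist:
        return 1.0
    if distance >= max_dist:
        return 0.0
    return 1.0 - (distance - min_dist) / (max_dist - min_dist)


def classify(listener, facing_deg, source, fov_deg=90.0, min_dist=5.0, max_dist=100.0):
    """Return (in_field_of_vision, in_field_of_audition, gain) for one source.

    fov_deg, min_dist and max_dist are hypothetical example values.
    """
    in_vision = angle_off_center_deg(listener, facing_deg, source) <= fov_deg / 2.0
    gain = linear_rolloff(math.dist(listener, source), min_dist, max_dist)
    return in_vision, gain > 0.0, gain


if __name__ == "__main__":
    me = (0.0, 0.0)  # listener at the origin, facing +x (0 degrees)
    print(classify(me, 0.0, (-3.0, 0.0)))   # generator right behind you -> (False, True, 1.0)
    print(classify(me, 0.0, (50.0, 0.0)))   # object in view, mid-distance
    print(classify(me, 0.0, (200.0, 0.0)))  # in view, but out of earshot -> (True, False, 0.0)
```

The generator right behind you lands outside the field of vision but, being inside the minimum distance, plays at full volume: everything is on, all the time, everywhere, unless you add something like the off-axis attenuation described earlier.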

On behalf of my fellow sound engineers, I hope this post will inspire small developers to take sound and its engineering into consideration sooner in their projects. Audio is no longer the paint on the walls. It must be part of the foundation.

Gary Spinrad

Audio Director — Zero Transform

Just because I have a short attention span doesn’t mean I